The Google I/O 2024 event showcased AI models ranging from small to large, signaling a future in which AI permeates everyday life.

At the Google I/O event in the early morning of May 15 (Hanoi time), Google introduced a series of AI products, ranging from large language models (LLMs) with tens of billions of parameters to smaller models that run locally on end users' devices.

Gemini is available on most services

Google announced Gemini 1.5 with a host of new features, including the ability to analyze text, code, video, and audio recordings of longer durations. This LLM is currently available in multiple versions, serving a variety of purposes.

Gemini 1.5 Pro will become a multi-purpose assistant in Workspace, able to pull information from any content in Drive and write emails that incorporate information from the document you're viewing. The model will come to Google Docs, Sheets, Slides, Drive, and Gmail next month, but will only be available to paid users for now.

Gemini 1.5 Flash is a new multimodal model that is as powerful as Gemini 1.5 Pro but is "optimized for narrow, high-frequency, low-latency tasks", which lets it respond quickly. However, Google has not revealed which products will integrate this model.

Gemini Live is a feature that allows users to interact with their smartphones using natural speech. Instead of waiting through a fixed question-and-answer sequence, users can interrupt the AI and get an immediate response. The AI can also “see” through the camera and provide feedback, such as solving math problems or providing information about objects in photos.

Gemini Nano is the smallest model, which Google is integrating into the Chrome browser for desktop computers starting with version 126. For now, this AI powers support features in Workspace Labs and can quickly generate text for social media posts or product reviews.

Google is also bringing Gemini Nano to Android. With this AI, Google says Android smartphones can help users avoid scam calls by detecting red flags, such as conversation patterns common among scammers, and then providing real-time warnings. Google will share more details about this feature later this year.

Gemini will also soon allow users to ask questions about videos on screen and will respond based on automatic captions, according to Google. Paying Gemini Advanced subscribers can also upload PDF files and have the AI provide information about them.

Gemini is also deeply integrated into Google Search. This AI does not simply return information with citation links, but can do more, such as answering queries in natural language, surfacing related information, and suggesting new content based on search keywords.

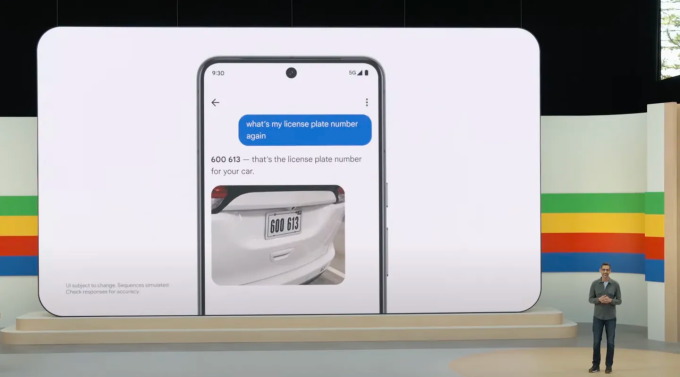

On the search front, Google also added Gemini-powered photo search, called Ask Photos. This feature lets the AI search through a user's Google Photos library and answer questions about it. For example, CEO Sundar Pichai demonstrated the feature by asking Gemini what his license plate number was. The answer was the plate number itself, accompanied by a photo so he could be sure the answer was correct.

Along with text processing, Google also offers the Imagen 3 image generation model. This AI understands prompts more accurately than the previous model and creates realistic, lifelike images with "astonishing levels of detail". It also produces fewer distracting details, errors, and visual artifacts than previous models. Google claims that Imagen 3 is "the most efficient image generation from text to date".

Google is also rolling out a custom chatbot builder called Gems. Like OpenAI’s GPTs, Gems lets users give Gemini instructions, allowing them to customize its responses and expertise. For example, if a user wants it to be a running coach, the AI will stick to that role and suggest steps to take. However, this feature is only available to Gemini Advanced subscribers.

Google Maps will also get smart features thanks to Gemini and the Places API. Developers can use these tools to summarize information about places and neighborhoods in their apps based on training data of 300 million place reviews. This saves developers time by eliminating the need to manually write descriptions of places they want to embed in their apps.

In addition, Google said Gemini will gradually replace Google Assistant to support deeper user interactions. This AI will also provide faster, more informative, and more interactive answers than Google Assistant.

Project Astra - a future like Star Trek

At the event, Google introduced Astra, a multimodal AI that the company hopes will become a "do-everything" virtual assistant. Using a smartphone camera, Astra can understand what it sees, remember where things are, and carry out tasks for users. Google said the goal of the tool is to be the "most honest and best" AI agent.

“It doesn’t just talk to you, it actually does things on your behalf,” said a Google representative.

Veo - Google's answer to OpenAI's Sora

At the event, Demis Hassabis, CEO of Google DeepMind, also introduced Veo, an AI that can create "high-quality" 1080p videos in a variety of visual and cinematic styles. It is considered a new rival to OpenAI's Sora.

Specifically, the AI understands natural language and can “accurately capture the tone of a prompt,” creating videos that closely reflect the user’s creative vision. The model also understands cinematic terms like “timelapse” or “aerial landscape photography,” and can create a coherent, consistent scene, with people, animals, and objects moving realistically throughout the shot.

At the event, Google demonstrated videos that were about eight seconds long. However, the company said users could request to extend the duration to 1 minute and 10 seconds, as well as tweak the results with additional prompts. That exceeds the one-minute maximum that OpenAI had previously announced for Sora.

Still, like many other video- and image-generating AIs, neither Veo nor Imagen 3 has been widely released yet. Google plans to bring some of Veo’s features to YouTube Shorts and other products in the future.

Gemma 2 with 27 billion parameters

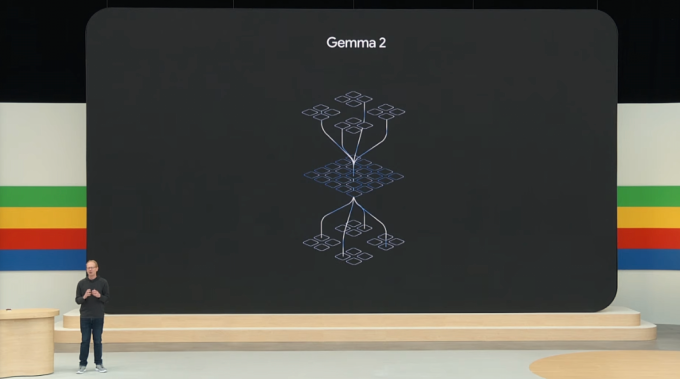

Gemma 2 is a major upgrade from the previous Gemma, which came in only two versions, with 2 billion and 7 billion parameters. The model has been optimized to run on Nvidia's next-generation GPUs, Google Cloud TPU servers, and the Vertex AI service.

However, Google did not share details about the model beyond an announcement from Josh Woodward, VP of Google Labs, that Gemma 2 is “twice as powerful” as open-source models like Meta’s Llama and Mistral AI’s Mistral. Gemma 2 will be available in June.

TH (according to VnExpress)