About 61% of passwords can be cracked in less than a minute with the help of AI, nearly three times the share that traditional cracking methods manage in the same time.

Statistics presented by Kaspersky researchers at the CSW 2024 security conference last week show that the risk of password exposure is rising even though users are aware of the need for strong passwords, as AI advances and is exploited for malicious purposes.

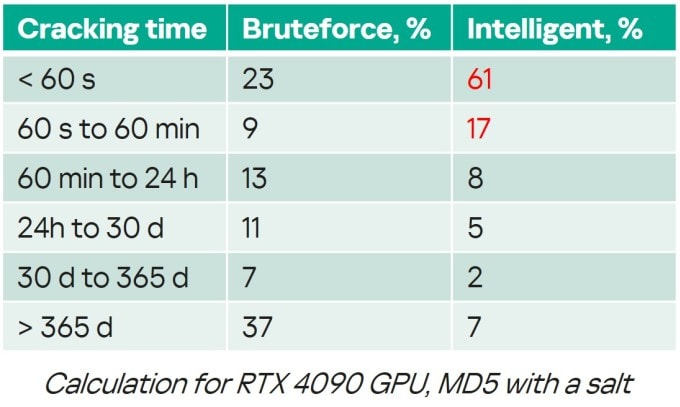

Today, users typically create passwords as plain text, which is then protected with a hash function that produces a string of characters that is almost impossible to read or reverse. To recover a password, one method hackers use is brute force: trying candidate character strings one after another until one produces the matching hash. With this method, about 23% of passwords can be cracked in under 60 seconds and another 9% in 1-60 minutes, according to Kaspersky's research.
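The idea can be illustrated with a minimal Python sketch. It assumes MD5 as the hash function (the algorithm named in Kaspersky's benchmark, and not one that should be used to store real passwords), and the function names `md5_hex` and `brute_force` are invented for this illustration. It is a toy version of the technique, not a reconstruction of any tool used in the research.

```python
import hashlib
from itertools import product
from string import ascii_lowercase


def md5_hex(password: str) -> str:
    """Return the hex MD5 digest of a password (illustration only)."""
    return hashlib.md5(password.encode("utf-8")).hexdigest()


def brute_force(target_hash: str, alphabet: str = ascii_lowercase, max_len: int = 4):
    """Try every string over `alphabet`, shortest first, up to `max_len` characters.

    Returns (password, attempts) on a match, or (None, attempts) if the
    whole search space was tried without success.
    """
    attempts = 0
    for length in range(1, max_len + 1):
        for candidate in product(alphabet, repeat=length):
            attempts += 1
            guess = "".join(candidate)
            # The attacker never reverses the hash: each guess is hashed
            # and compared with the stored value.
            if md5_hex(guess) == target_hash:
                return guess, attempts
    return None, attempts


if __name__ == "__main__":
    stored = md5_hex("abc")  # what a leaked database might contain
    print(brute_force(stored))
```

A three-letter lowercase password falls after a few hundred guesses here. Real attacks work the same way in principle, only on hardware that tests billions of candidates per second.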

However, attackers can easily build their own AI models to improve their guessing, and even recover the original characters of hashed passwords, by drawing on databases of previously leaked passwords. For example, the dataset of 10 billion passwords shared in July has become a large source of data for these models.

Alexey Antonov, head of AI research at Kaspersky, said his team trained an AI model to analyze leaked passwords and found a significant increase in cracking speed. About 32% of passwords could be recovered from hashed form in under 60 minutes.

Running the AI application on an RTX 4090 GPU, which computes MD5 at 164 billion hashes per second, cracked 61% of passwords within 60 seconds and another 17% in 1-60 minutes, nearly three times the rate of the old-fashioned brute-force method.
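To put the 164 billion hashes per second figure in perspective, the following Python sketch estimates how long an exhaustive search would take at that rate. The helper names and the two example alphabets (26 lowercase letters, 95 printable ASCII characters) are assumptions made for illustration. The estimate covers plain brute force only, not the AI-guided guessing described above, and it counts only passwords of exactly the given length.

```python
HASHES_PER_SECOND = 164e9  # MD5 rate on an RTX 4090, as cited in the article


def keyspace(alphabet_size: int, length: int) -> int:
    """Number of distinct passwords of exactly `length` characters."""
    return alphabet_size ** length


def worst_case_seconds(alphabet_size: int, length: int,
                       rate: float = HASHES_PER_SECOND) -> float:
    """Seconds needed to try every password of the given length at `rate`."""
    return keyspace(alphabet_size, length) / rate


if __name__ == "__main__":
    print(f"guesses per minute: {HASHES_PER_SECOND * 60:.3g}")
    for label, size in (("lowercase only", 26), ("printable ASCII", 95)):
        for length in (8, 10, 12):
            seconds = worst_case_seconds(size, length)
            print(f"{label:16} length {length:2}: {seconds:.3g} s")
```

Under these assumptions, every 8-character lowercase password can be tried in under two seconds, while 8 characters drawn from the full printable set take roughly 11 hours. Each extra character multiplies the time by the alphabet size, which is why length and character variety both matter.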

Users' password habits are one of the factors that make passwords easier for AI to crack. Passwords built from related characters or common strings such as "admin", "password", "qwerty12345" or "nguyen" are all in hackers' "dictionaries" and are therefore easy to find. To reduce the chance of being cracked, users should set long passwords that combine letters, numbers and special characters, or use the random password generators that experts recommend.
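As a rough sketch of that advice, the Python snippet below generates a random password with the standard library's `secrets` module and rejects any candidate containing a common string. The fragment list holds only the four examples quoted above and the function names are hypothetical. A real attacker's dictionary is far larger, so this shows the principle rather than a complete password policy.

```python
import secrets
import string

# Only the examples quoted in the article; real dictionaries hold millions of entries.
COMMON_FRAGMENTS = ("admin", "password", "qwerty12345", "nguyen")


def contains_common_fragment(password: str) -> bool:
    """True if the password contains a known common string, ignoring case."""
    lowered = password.lower()
    return any(fragment in lowered for fragment in COMMON_FRAGMENTS)


def generate_password(length: int = 16) -> str:
    """Return a random password mixing letters, digits and special characters."""
    if length < 4:
        raise ValueError("length must be at least 4 to fit every character class")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        # secrets.choice draws from the operating system's secure random source.
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        has_all_classes = (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        )
        if has_all_classes and not contains_common_fragment(candidate):
            return candidate


if __name__ == "__main__":
    print(generate_password())
    print(contains_common_fragment("Admin2024!"))
```

The `secrets` module is used instead of `random` because it is designed for security-sensitive values. Password managers typically offer the same kind of generator without any code.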

Increasing risk of cyber attacks in the AI era

As AI is increasingly applied to life and work, attackers are also gaining many new methods. "AI is a powerful tool, but our enemies also use it," Antonov warned. That is why this year's CSW security conference focuses on the risks from AI.

According to Antonov, besides using AI to find passwords faster, or using generative AI tools such as ChatGPT to write malicious code and automate attacks, criminals can also use AI to mount non-technical attacks on users.

Typical examples include using AI to generate text, images, audio and video for fake content, deepfakes and scams. One of the most sophisticated attacks occurred in February in Hong Kong, when a scammer used deepfakes to impersonate an executive in an online meeting and convinced a finance employee to transfer $25 million.

Kaspersky statistics show that 21% of phishing emails are now generated by bots. In addition, during major events such as Halloween, Black Friday and New Year, the amount of AI-generated content increases sharply, showing that attack groups are using the technology to spread phishing content on a large scale.

At a security conference in Vietnam in May, regulators and experts also said that AI developers should be subject to legal regulation, given the increasing risk of cyber attacks in the AI era.

Lieutenant Colonel Nguyen Anh Tuan, Deputy Director of the National Population Data Center under the Ministry of Public Security, gave the example of AI being used to create many applications that copy the images and names of the ministry's apps and websites, luring people into downloading and installing them and entering information such as citizen identification numbers and login passwords. He proposed that the legal framework on AI be completed soon to stay ahead of the trend, and that documents be issued to regulate ethics in the development, production, application and use of AI by domestic and foreign entities, covering matters such as the protection of privacy, personal data and human rights.

According to Deputy Minister of Information and Communications Pham Duc Long, AI is reshaping almost every industry, including network security, and is being used on both sides: attacking networks and defending systems. He recommends that organizations apply AI to strengthen network security, taking advantage of its ability to analyze and filter large data sets at high speed to identify abnormal behavior and detect malicious activity that traditional technology cannot handle.

TB (according to VnExpress)