As technology advances, fraud using AI is becoming ever more sophisticated and cunning.

Ms. HB (Hanoi) had not seen her old friend for a long time, so when a message arrived on Messenger asking to borrow money for medical treatment, she hesitated. Before she could refuse, the friend on the other end started a video call. The image flickered and the sound kept cutting out, apparently because of a weak phone signal, but having "witnessed" her friend pleading for money for medical treatment, Ms. B felt safe transferring nearly a hundred million VND. Only when others warned her did Ms. B get a shock: her friend's Facebook account had been hacked, and the "friend" in the video call was just a "virtual person", a product of artificial intelligence (AI).

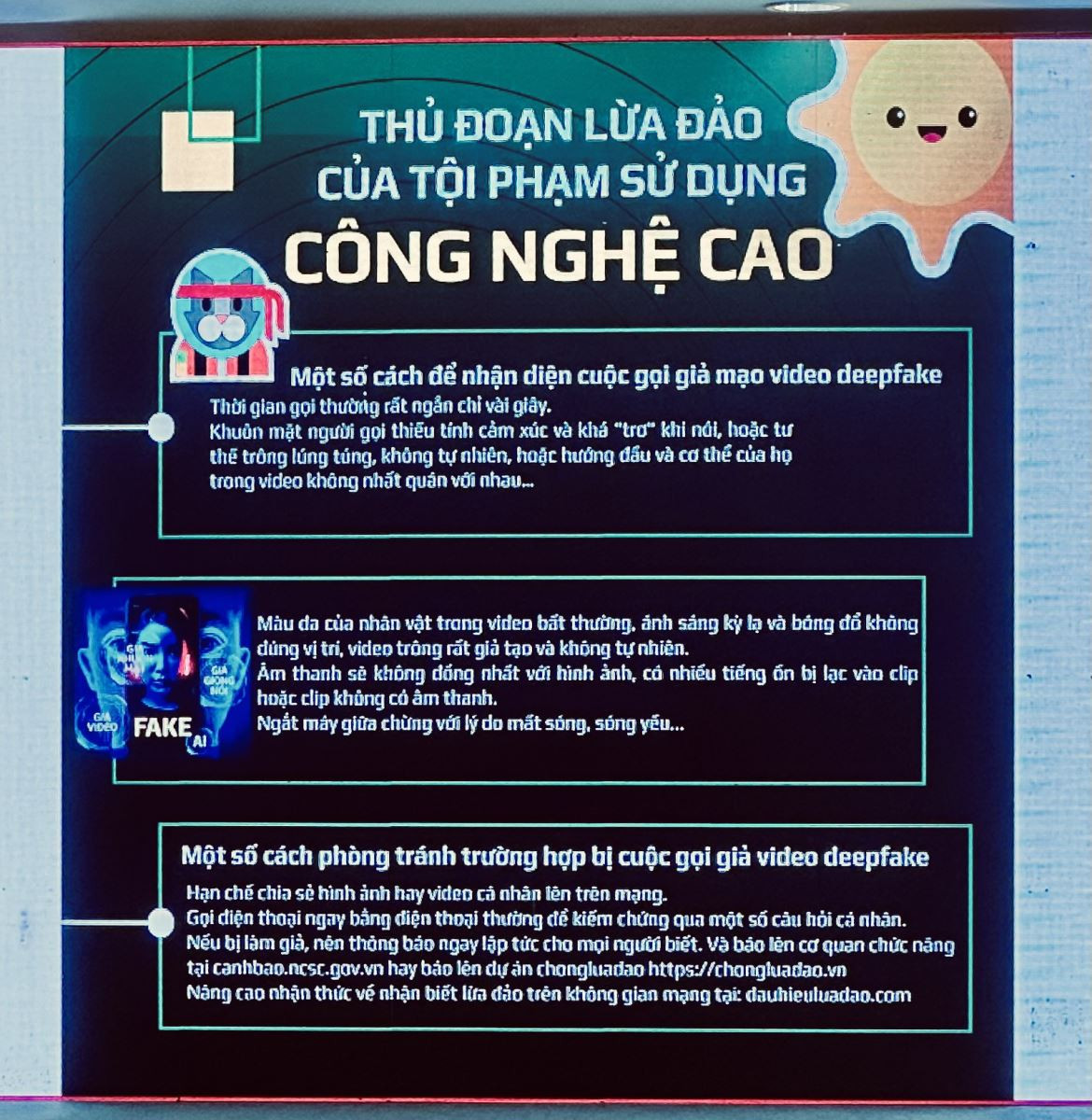

As science and technology progress rapidly, artificial intelligence is also developing very quickly, becoming more capable and more refined every day. The benefits of AI are undeniable: better prediction, labor savings, a boost to creativity, lower language barriers, personalized data, and more. However, AI also has many negative aspects, one of which is that it has become a tool for criminals to commit fraud. High-tech criminals use deepfake AI (software that synthesizes faces and voices) to harvest a person's likeness and facial expressions from existing photos and footage, then create photos and even videos that look exactly like someone the target knows.

Cybersecurity companies have recorded that in 2023 the number of deepfake scams worldwide increased tenfold compared with before, with North America alone seeing an increase of 1,740%. In some deepfake cases, victims transferred as much as 25 million dollars to criminals because the AI-generated images were so realistic that they could not be told apart from the real thing.

In Vietnam, since the second half of 2023, Bkav Group has continuously received reports and requests for help from victims nationwide concerning fraud carried out with deepfakes. In many cases, the attackers gained control of a Facebook account but did not take it over outright. Instead, they secretly monitored the account and collected user information, waiting for an opportunity to impersonate the account owner and ask friends and relatives for loans. They used AI to create a fake video with the face and voice of the account owner. When asked to make a video call to verify their identity, they accepted the call but then quickly disconnected. Many people, like Ms. HB in this article, have fallen into the scammers' trap. It is not possible to count the total number of people defrauded or the amount of money lost to AI tricks, but it is certainly not small. These frauds cause huge losses for individuals, families, and society, and they are all the more distressing because the victims are often elderly, have little experience with social networks, or are already in very difficult circumstances.

Recently, information technology and network security experts have repeatedly urged people to be cautious when making financial transactions online, and have given guidance on telling real from fake when receiving phone calls or video calls, clicking on unfamiliar links, or joining groups and associations on social networks. People in general, and social network users in particular, are also advised to be very careful when sharing images and personal information, so that criminals cannot exploit them. More than ever, vigilance and the idea of the "smart user" have become essential for every citizen who makes financial transactions online.

However, calling on users to be cautious and vigilant is not enough. This is the time for authorities to show more forcefully their role in protecting people's rights and in fighting fraud without compromise.

The first step is to build regulatory safeguards for electronic transactions that limit suspicious transactions (the recent regulations on transfer limits and the reported application of biometrics in banking transactions are examples).

Next, there needs to be a strong response to those who illegally exchange, buy, sell, and exploit users' personal data. In reality, besides users who accidentally reveal or leak information on social networks or in daily life, there are signs that criminals have been able to exploit personal data without the owner's permission. The supply of user information to criminals needs to be investigated and handled more strictly.

Finally, fraudsters must be pursued and brought to justice, made to compensate their victims, and subjected to severe penalties.

Only then can we deter and prevent fraud, as well as the illegal supply of users' personal data, with some effectiveness, and at the same time strengthen people's trust in a healthy, safe, and effective digital environment.